So here we are, consuming the final hours of the last scheduled test of the WLCG service. In the coming weeks we should just be making final preparations, waiting for the real data to arrive.

Overall, the Tier-1 services have been up and running basically 100% of the time. Of the two most relevant issues, I would first mention the brutal I/O of the CMS skimming jobs. After they were limited (to 100, according to CMS) they kept running until mid-Thursday, sustaining quite a high load on the LAN (around 500MB/s). On the evening of Wednesday 28 May, ATLAS launched a battery of reprocessing jobs which very quickly filled up more than 400 job slots. Apparently all of these jobs read the detector Conditions data from a big (4GB) file sitting in dCache. This file of course quickly became super-hot, since all of these jobs were trying to access it simultaneously. This caused the second issue of the week. The output traffic of the pool holding this file immediately grew to 100MB/s, saturating the 1Gbps switch that (sadly, and until we manage to deploy the definitive 2x10Gbps 3Coms) links it to the central router. This network saturation caused the dCache pool-server control connection to lose packets, which eventually hung the pool.
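
A quick back-of-the-envelope sketch of why that pool uplink had no chance. The decimal units, the neglect of protocol overhead and the idea that every job reads the whole file exactly once are simplifying assumptions for illustration, not measurements:

```python
# Rough numbers for the hot-file incident described above.
# Assumptions (not from the monitoring): decimal units, no protocol
# overhead, and every job reads the whole file once.

LINK_GBPS = 1.0          # pool uplink to the central router
FILE_GB = 4.0            # size of the Conditions data file
JOBS = 400               # job slots filled by the reprocessing jobs
OBSERVED_MB_S = 100.0    # pool output traffic seen during the incident


def link_capacity_mb_s(gbps: float) -> float:
    """Theoretical capacity of a link in MB/s (1 byte = 8 bits)."""
    return gbps * 1000.0 / 8.0


def hours_to_serve(file_gb: float, jobs: int, rate_mb_s: float) -> float:
    """Hours needed to push one copy of the file to every job at a given rate."""
    total_mb = file_gb * 1000.0 * jobs
    return total_mb / rate_mb_s / 3600.0


capacity = link_capacity_mb_s(LINK_GBPS)
utilisation = OBSERVED_MB_S / capacity
drain_hours = hours_to_serve(FILE_GB, JOBS, OBSERVED_MB_S)

print(f"link capacity : {capacity:.0f} MB/s")
print(f"utilisation   : {utilisation:.0%}")
print(f"time to serve : {drain_hours:.1f} h")
```

Under these assumptions the 100MB/s of payload already sits at about 80% of what a 1Gbps link can carry in theory, and feeding 400 jobs through it would take more than four hours.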

At first sight it seems that the Local Area Network has been the big issue at PIC this CCRC08. Let's see what the more detailed post-mortem analysis teaches us.

Friday 30 May 2008

Friday 23 May 2008

LAN is burning, dial 99999

This has been an intense CCRC08 week. We have kept PIC up and running, and the performance of services has been mostly good. However, we have had some interesting issues from which I think we should try to learn some lessons.

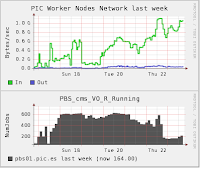

CMS has been basically the only user of the PIC farm this week, due to the lack of competition from other VOs. It was running about 600 jobs in parallel for some days. We quickly saw these jobs start to read input data from the dCache pools at a huge rate. By Tuesday the WNs were reading at about 600MB/s sustained. Both the disk and the WN switches were saturating. On Thursday at noon Gerard raised the network uplink of some of the Thumpers from 5 to 8 Gbps with 3 temporary cables crossing the room (yes, we will tidy them up once the long-awaited 10Gbps uplinks arrive) and we saw the extra bandwidth immediately eaten up.

On Thursday afternoon the WNs spent some hours reading from disk at the record rate of 1200MB/s. Then we tried to limit the number of parallel jobs CMS was running at PIC, and we saw that with only 200 parallel jobs it was already filling up 1000MB/s.

Homework for next week is to understand the characteristics of these CMS jobs (they seem to be the "fake-skimming" ones). What is their MB/s/job figure? (We have been asking the same question for years, and now once again.)
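
As a first stab at that homework, here is a minimal sketch that divides the aggregate read rates quoted in this post by the number of parallel jobs. It assumes that all WN read traffic came from CMS and that every job reads at the same rate. The Tuesday figure was taken while the switches were saturating, so it should be read as a lower bound:

```python
# Per-job read rate from the aggregate figures quoted in this post.
# Each observation: (label, parallel jobs, aggregate read rate in MB/s).
observations = [
    ("Tuesday, switches saturating", 600, 600.0),
    ("Thursday, after limiting CMS", 200, 1000.0),
]


def mb_per_s_per_job(jobs: int, aggregate_mb_s: float) -> float:
    """Average read rate of one job, assuming all jobs read at the same rate."""
    return aggregate_mb_s / jobs


rates = {label: mb_per_s_per_job(jobs, rate) for label, jobs, rate in observations}
for label, value in rates.items():
    print(f"{label}: {value:.1f} MB/s/job")
```

The two estimates differ by a factor of five (1 versus 5 MB/s/job), which suggests the jobs were limited by the network on Tuesday and would read faster whenever bandwidth allows.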

The second CCRC08 hiccup of the week arrived yesterday evening. ATLAS transfers to PIC disk started to fail. Among the various T0D1 ATLAS pools, there were two with plenty of free space while the others were 100% full. For some reason dCache was assigning transfers to the full pools. We sent an S.O.S. to dCache support and they answered immediately with the magic (configuration) recipe to solve this (thanks Patrick!).

Now it looks like things are quiet (apart from some blade switches burning) and green... ready for the weekend.

Friday 16 May 2008

Intensive test of data replication during the CCRC08 run2

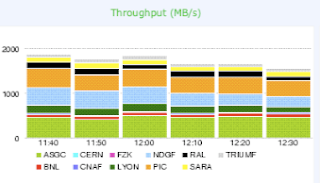

Over the last three days, as part of CCRC08 (Common Computing Readiness Challenge) run 2, PIC's performance importing data was impressive. The scope of the test was to replicate data among all nine ATLAS Tier-1s. Each one held a 3TB subset of data which was replicated to every other T1 according to some shares; overall, more than 16TB were replicated from all the other T1s to PIC. We started the test on Tuesday morning and after 14 hours PIC had imported more than 90% of the data with impressive sustained transfer rates. Moreover, we reached 100% efficiency from 7 T1s and 80% from the other two. During the first kick, when all the dataset subscriptions were placed in bulk, we reached approximately 0.5GB/s of throughput with 99% efficiency (see plot number 1):

After 4 hours the import efficiency was still at 99% and the accumulated mean throughput was over 300MB/s. This led to the correct placement of 16TB of data in 14 hours.
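
The figures above hang together, as a small sanity check shows. This is only a sketch that assumes decimal units (1 TB = 10^6 MB) and a constant rate over the whole period:

```python
def mean_throughput_mb_s(terabytes: float, hours: float) -> float:
    """Average rate in MB/s needed to move a volume in a given time."""
    return terabytes * 1_000_000 / (hours * 3600)


def volume_tb(rate_mb_s: float, hours: float) -> float:
    """Volume in TB moved at a constant rate over a given time."""
    return rate_mb_s * hours * 3600 / 1_000_000


# 16 TB placed in 14 hours
overall_rate = mean_throughput_mb_s(16, 14)
# 300 MB/s sustained over the first 4 hours
first_hours_tb = volume_tb(300, 4)

print(f"mean rate for 16 TB in 14 h: {overall_rate:.0f} MB/s")
print(f"volume at 300 MB/s for 4 h : {first_hours_tb:.2f} TB")
```

So 16TB in 14 hours corresponds to a mean of roughly 317MB/s, in line with the "over 300MB/s" accumulated throughput seen after the first 4 hours.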

On the other hand, we observed different behavior while exporting data to other T1s: the efficiencies were not as brilliant as for importing. Note that while exporting we rely on external FTS services, so sometimes the errors are not related to our capabilities, but we did observe some timeouts from our storage system. The network was indeed a limit, but we observed saturation at pool level while importing and not while others were reading, which means that the network between the pools and the data receivers was not to blame for the efficiency drop while exporting. We have a hint: it seems that our dCache has problems returning the TURLs (transfer URLs) when it is under heavy load (a well-known dCache bug), and this could cause the kind of errors observed during the exercise. Besides that, almost all the T1s got more than 95% of the data from PIC, although sometimes not at the first attempt, and the exercise can be considered more than successful both from the PIC side and from the ATLAS side.

In my opinion this was one of the most successful tests run so far within the ATLAS VO, as it involved all T1s under a heavy load of data cross-replication. The majority of sites performed very well, and only a couple of them experienced problems. Let me stress again the complexity of the system, which needs a bunch of services ready and stable in order to complete every single transfer: ATLAS data management tools, SE, FTS, LFC, network, etc.

We have to keep working hard to achieve robustness of the current system, which has improved enormously in the last months: dCache is performing very well and the new database back-end for the FTS ironed out some of the overload problems found in past exercises. For that reason I want to deeply thank all the people involved in maintaining the computing structure at PIC; these results show we are on the right track. Congrats!

Thursday 15 May 2008

In April, a thousand rains

Following the Spanish proverb "en abril, aguas mil", the rain finally fell on Catalunya in April after months of severe drought. For the PIC reliability metric, the rainy season started a bit earlier, in March, and it looks as if it kept raining in April. In that month, our reliability result was a yellowish (not green, not red) 90%. Slightly better than March's (...positive slope, which is always good news) but still below the 93% WLCG goal for Tier-1s. The main contribution to last month's unreliability was, believe it or not, the network. The networking conspiracy we suffered on 28-29 April is responsible for at least 8 out of the 10 reliability percentage points we lost last month. The non-dedicated backup described by Gerard hours ago will help to lower our exposure to network outages, but we should keep pushing for a dedicated one in the future.

We also had other operational issues last month which contributed to the overall unreliability. Every service had its grey day: in the Storage service, a gridftp door (dcgftp07 by name) mysteriously hung in a funny way such that the clever dCache could not detect it and kept trying to use it. As far as I know, this is still in the Poltergeist domain. I hope it will just go away with the next dCache upgrade. For the Computing service there have also been some hiccups... it is not nice when an automated configuration system decides to erase all of the local users on the farm nodes at 18:00.

We even had a nice example of collaborative destruction among Services last month: a supposedly routine and harmless pool replication operation in dCache ended up saturating one network switch which happened to have, among others, the PBS master connected to it, which immediately lost connectivity to all of its Workers. Was it sending the jobs to the data, or bringing the data to the CPUs? Anyhow, a nice example of Storage - Computing love.

First LHCOPN backup use at PIC

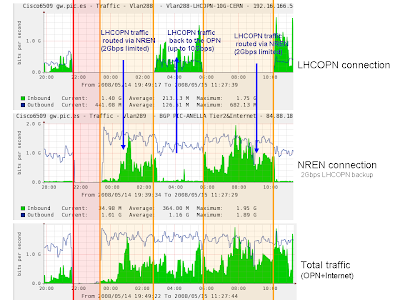

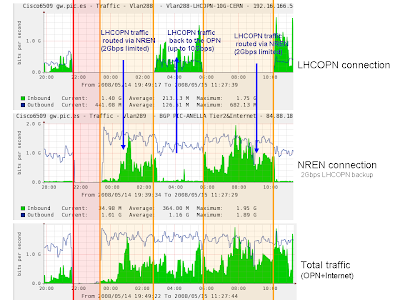

This is the first time PIC has stayed online while fiber rerouting work was being carried out.

Overnight we had a pair of scheduled network downtimes. The first one (red in the image) affected the PIC-RREN connection, which is a single 10Gbps fiber connection from PIC to Barcelona. Therefore, as scheduled, we had no connectivity from 21:35 to 23:20.

The second scheduled downtime was due to rerouting work on the French part of the PIC-CERN LHCOPN fiber. This used to be critical for us, since we were isolated from the LHCOPN while the work was taking place, but it is not like this anymore. Now our NREN routes us through GÉANT regardless of where the data is going, so we stay connected! As you can see in the orange areas of the image, while reaching the OPN through our NREN (Anella+RedIRIS) we are limited to 2Gbps (our RREN uplink), but we still reach it, so we can finally say we have a [not dedicated] backup link.

Waiting for the LHCOPN dedicated backup link won't be so hard now.

Monday 5 May 2008

Networking conspiracy

Last Thursday and Friday (1st and 2nd May) we had a scheduled downtime for the yearly electrical maintenance of the building. Actually, this was the "Easter intervention" that was moved at the last minute due to the unscheduled "lights off" that we had on 13th March. Anyway, following what now seems to be a tradition, this time we also had a quite serious unscheduled problem just before our so-nicely-scheduled downtime. This time it was the network that caught us by surprise. On Monday 28th April, our regional NREN had a scheduled intervention to deploy a new router to separate the switching and routing functionalities. We had been notified about this intervention. They told us we could see 5-10 minute outages in a window of 4 hours.

In the end, the reality was that the intervention completely cut our network connectivity at 23:30 on 28-Apr. The next morning, at 6:00, we recovered part of the service (the link to the OPN), but the non-OPN connectivity was not recovered until 17:30 on 29-Apr.

When one thinks about the network one tends to assume "it's always there". On that N-day we decided to challenge this popular belief, so we did not have just one network incident but two. Just four hours after we recovered the OPN link, somewhere near Lyon a bulldozer destroyed part of the optical fiber that links us to CERN. This kept our OPN link completely down from 10:00 on 29-Apr until 01:00 on 30-Apr.

Not bad as an aperitif, hours before a two-day scheduled intervention...
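
For the record, the timeline above can be turned into outage lengths with a few lines of Python. This is just a sketch built from the timestamps quoted in this post (taken as local time in 2008); the variable names are ours:

```python
from datetime import datetime

# Timestamps quoted in the post (local time, year 2008).
FULL_CUT = datetime(2008, 4, 28, 23, 30)      # intervention cuts all connectivity
OPN_BACK = datetime(2008, 4, 29, 6, 0)        # OPN link recovered
FIBER_CUT = datetime(2008, 4, 29, 10, 0)      # bulldozer near Lyon
NON_OPN_BACK = datetime(2008, 4, 29, 17, 30)  # non-OPN connectivity recovered
OPN_BACK_AGAIN = datetime(2008, 4, 30, 1, 0)  # OPN link recovered again


def hours(start: datetime, end: datetime) -> float:
    """Length of an interval in hours."""
    return (end - start).total_seconds() / 3600.0


outages = {
    "OPN, first outage": hours(FULL_CUT, OPN_BACK),
    "non-OPN": hours(FULL_CUT, NON_OPN_BACK),
    "OPN, second outage": hours(FIBER_CUT, OPN_BACK_AGAIN),
}
opn_total = outages["OPN, first outage"] + outages["OPN, second outage"]

for name, length in outages.items():
    print(f"{name}: {length:.1f} h")
print(f"OPN down in total: {opn_total:.1f} h")
```

That gives 6.5 hours and 15 hours for the two OPN outages (21.5 hours in total) and 18 hours without non-OPN connectivity.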

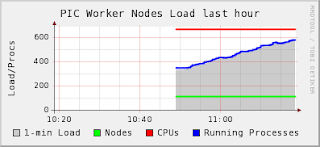

Throttling up

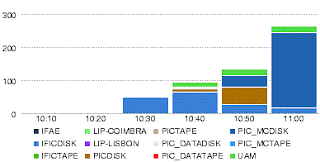

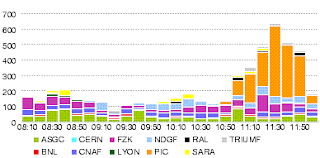

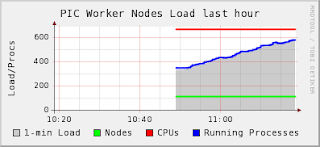

After the last power stop, it is time for PIC to throttle up and catch up with the regular activity of the highly demanding LHC experiments. It is also a good test of the ability of the services, both at the site and at CERN, to reach steady running after some days of outage. For the two most important and critical services, computing power and data I/O, nominal activity was reached extremely fast: only 30 minutes passed between the start of the PIC pilot factory and the moment more than 500 jobs were successfully running (pic.1):

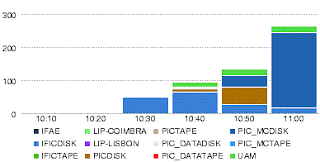

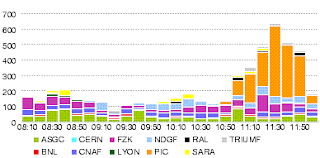

For the data transfers the delay was even shorter: after restarting the site services at CERN it only took about 5 minutes to start moving data in and out of PIC (pic.2, files done, and pic.3, throughput in MB/s):

Given the complexity of the system and the number of cross-dependencies of each of the services that were successfully recovered, one can conclude that the "re-start" was extremely successful :)... but of course everything can be improved!
